Summary of Responses from the Learning Policy Institute and Linda Darling-Hammond to Questions from The 74

The following is from Linda Darling-Hammond and the Learning Policy Institute in response to questions for a story in The 74, raising questions about some of Darling-Hammond’s research and public statements.

Statement from The 74: Challenges the “use of state-level NAEP data to suggest that New Orleans charter-based reforms are ineffective.” (p. 43)

Response from Dr. Darling-Hammond: We did not cite the study that used state-level NAEP data to draw inferences specifically about New Orleans, nor did we declare that the “New Orleans charter-based reforms are ineffective.” We noted the difficulty of creating a comparison group for New Orleans, given the changes in the population after the hurricane, and we reported the results of several studies, including those that, using different comparison groups, reported positive and negative conclusions about charter schools in New Orleans and Louisiana to conclude that the jury is out with respect to the overall achievement effects of the reform strategy in the state and city. Our conclusion to that section of the report states: “Thus, different studies using different kinds of comparison groups come to very different findings.” (p. 43)

Statement from The 74: Statement about testing: “What we see from a lot of research is that the kinds of standardized tests that we use [in the United States] predict very little about success later in life. They don't add anything really at all to predicting college success and nothing about life success." (statement around 7:30 in the video)

Response from Dr. Darling-Hammond: In the context of the speech, the statement was aimed at multiple-choice tests used to predict college success and later life success. Decades of studies have found that most such standardized tests, like the SAT and ACT, have less predictive value than course-taking and class rank for predicting college success. Examples include:

- A report on one of the most recent such studies, which found that ACT and SAT tests add no predictive information beyond high school course-taking, grades, and other activities used for admissions, can be found here.

- Here is a study from the University of California system finding that high school grades are the strongest predictors of college success and become stronger predictors throughout the years of college, even after the freshman year.

- Another College Board study looking at the predictive validity of the SAT found, as most such studies do, that high school GPA is the strongest predictor of early success in college and that only the writing section of the SAT came close to having as much predictive power. See here. The stronger value of assessments that include authentic performance, such as written analyses, was part of the main point in the speech that was quoted.

One of the studies cited by LPI does really address the question of whether multiple-choice standardized tests are good predictors.

- The study here does not really look at the effects of student test scores on later life. It looks at the effects of teachers’ “value-added” on student earnings later. While scores are a factor in determining teachers’ value-added, the level of a teacher’s value-added metric is not directly related to the absolute measure of student scores. In fact, this analysis shows that VAM is frequently greater for teachers whose students have relatively lower scores in the middle of the band than for teachers whose students have the highest scores.

Statement from The 74: Challenges the claim: “Federal policy under No Child Left Behind (NCLB) and the Department of Education's 'flexibility' waivers has sought to address this problem by beefing up testing policies—requiring more tests and upping the consequences for poor results, including denying diplomas to students, firing teachers, and closing schools. Unfortunately, this strategy hasn't worked. In fact, U.S. performance on the Program for International Student Assessment (PISA) declined in every subject area between 2000 and 2012— the years in which these policies have been in effect.” (Similar claims made here.)

- Raw PISA data would not allow for researchers to isolate the impact of specific policies, such as test-based accountability.

- A review of international test scores released by the Economic Policy Institute found that disadvantaged students have made large gains on PISA and TIMSS tests in recent years.

- The same study argued, “It is extremely difficult to learn how to improve U.S. education from international test comparisons” and instead they examine what policies are associated with national (low-stakes) test score gains in the United States. “We … found that students in states with stronger accountability systems do better on the NAEP math test, even though that test is not directly linked to the tests used to evaluate students within states,” they wrote.

Response from Dr. Darling-Hammond: The first statement is true—no raw data isolates the impact of a single policy, but it proves the point that if other factors—such as growing poverty, school funding disparities or other factors that are discussed in the EPI study—are responsible for the overall declines, policy should be addressing those factors to make a difference in overall performance. The authors of the EPI study do not claim that the federal NCLB was a successful policy. They draw attention to different strategies used by states when they evaluate differences across states. Their definition of accountability systems is a different definition than that used by NCLB. If the federal policy were successful, these cross-state differences would not matter and perhaps would not exist. In fact, the differences between states like New Jersey (which showed positive outcomes) and New York (which showed negative outcomes, relative to NJ), cited by these authors are not caused by high-stakes testing, as New York (the lower scorer) engages in much more extensive high-stakes testing than New Jersey.

- A synthesis of research by Susanna Loeb and David Figlio found, “The preponderance of evidence suggests positive effects of the accountability movement in the United States during the 1990s and early 2000s on student achievement, especially in math.”

The Loeb and Figlio study was referring to accountability strategies in the 1990s and early 2000s that preceded NCLB, which were more thoughtful than the NCLB approach (more focused on improvement, rather than sanctions, and more informed by performance-based assessments which many states used at that time) and produced stronger results, as noted above. NAEP gains were much greater in the decade before NCLB than in the decade after it had taken effect.

- Research on No Child Left Behind has generally found that the law improved student achievement on low-stakes tests, particularly in math. See here, here, and here.

The first of these studies, by Thomas Dee, notes the gains in mathematics on NAEP, but does not note that these gains were about half as large as the gains that occurred in the decade which preceded NCLB. Dee and his co-authors do note that “we find no evidence that NCLB increased reading achievement in either 4th or 8th grade.” Further, a study by RAND found that PISA measures higher order skills to a much greater extent than NAEP does, especially in math.

The second study provides an interesting discussion of a proposed methodology but it doesn’t actually prove the point for which it is cited above. The third study focuses on a subset of schools deemed to be on the margin of failing AYP, not the overall education system.

Statement from The 74: Researchers Dan Goldhaber and Jim Wyckoff said there is very little evidence that traditionally prepared teachers systematically outperform other teachers by significant margins. Wyckoff said, “What evidence exists on the horse race suggests that in the schools where we observe both [alternatively certified] and [traditionally prepared] teachers there appears to be small differences at best. The big result is that the distributions appear to largely overlap.” Research listed: here, here, here, here, here

Response from Dr. Darling-Hammond: The term “substandard” is a straightforward description of how teachers are classified in the certification system. Those who hold less than full credentials because they have not met all of the state’s standards for a license are classified as holding substandard credentials or permits. Similarly, by definition, teachers who have not completed the preparation required for full certification are considered underprepared. By using these descriptive terms, we are not making a claim about effectiveness. However, there is also research demonstrating that less-than-fully-prepared teachers are less effective.

With respect to effectiveness of newly entering teachers and their influences on student achievement, the most productive comparison is not between veteran teachers who entered through different traditional routes, but rather between those who have not completed their preparation (whether through a traditional or an alternative route) and those who have. In many states, alternative route candidates, including those who enter through Teach for America (TFA), ultimately complete all the coursework for certification as traditionally prepared entrants, but they do so over one to two years, while teaching, depending on the state and the program. Various studies have contrasted the effectiveness of less-than-fully-prepared teachers, as measured by student achievement, with the results achieved by fully certified teachers. As a group, these studies have found that the students of teachers who have not had preparation or who have not completed their training programs (and may be enrolled in alternative routes to certification) do significantly less well in reading and math than students of new, fully certified teachers, with the negative effects usually most pronounced in elementary grades and in reading. Some of these studies are the ones cited by The 74 in support of the opposite claim. For example:

- Henry et al. 2014 examines teachers in their first three years of teaching in North Carolina and finds that the 15,026 teachers who entered the classroom before completing their full credentials through the state’s alternate entry pathway showed a significant negative effect on student achievement in math and science. The 466 teachers (3% of the alternate entry pool) who entered through Teach for America had more positive outcomes. However, the estimates include those who have completed their preparation, so they don’t address the question about whether those still in training are as effective as those who have completed their preparation.

- Kane, Rockoff, and Staiger compared entrants into New York City schools by different categories of initial pathway and certification status.1 Like the Boyd et al. study we describe below, this study found that, in math and reading, students of first-year teachers who were not yet fully prepared (those from NY Teaching Fellows, Teach for America, and others who had not yet been prepared) did less well than those of first-year teachers who were “regularly certified.” This was true even though the authors’ method minimized the differential effect of teacher preparation by including teachers licensed through “transcript review” and temporary permits in the same group as pre-service teacher education program-prepared teachers. It was not until later years, after the teachers had completed more preparation, that the alternative entrants demonstrated comparable outcomes on some measures.

- The Clark et al. (2013) study compares Teach for America and The New Teacher Project (TNTP) Teaching Fellows with other secondary mathematics teachers. This comparison does not address the relative effectiveness of teachers who are fully prepared vs. those who are less-than-fully prepared, because the comparison teachers also included those who were participating in or had completed an alternative route to certification along with those who completed a traditional route to certification. The group of teachers compared to TFA teachers included 41 percent who came through an alternative route. Among the group of teachers compared to the TNTP sample, 27 percent were alternatively certified. Furthermore, TFA and TNTP teachers included those who had finished their training and become fully certified as well as some who had not. So the comparison was not designed to reveal what the differences would be between not-yet-prepared and fully prepared teachers, or alternatively prepared and traditionally prepared teachers.

Beyond the fact that these studies do not address the question of whether fully prepared vs. less-than-fully prepared teachers are equally effective, there are many studies that have looked at this question directly and find that fully prepared teachers are generally more effective. A small sample of these include:

- A study of teachers in North Carolina high schools by economists at Duke University found that among the greatest negative influences on students’ achievement was having a new teacher who entered teaching through an alternative certification pathway requiring no initial teacher preparation. The authors concluded: “We find compelling evidence that teacher credentials affect student achievement in systematic ways and that the magnitudes are large enough to be policy relevant. As a result, the uneven distribution of teacher credentials by race and socio-economic status of high school students—a pattern we also documented—contributes to achievement gaps in high school.”2

- A large-scale study using data from Houston, Texas, on more than 132,000 students and 4,400 teachers in grades 4-5 over a six-year period on six reading and mathematics achievement tests found that fully certified teachers consistently produced significantly stronger student achievement gains than uncertified teachers or those participating in alternative routes, including TFA teachers, on all six tests.3 Having an under-certified teacher depressed student achievement by up to three months annually as compared to having a fully certified teacher with the same experience working in a similar school. Preparation made a difference: Many alternate route teachers, including TFA teachers, became as effective as preservice-trained teachers after they finished their preparation, if they stayed in the profession. More than 80 percent of TFA grads left by their fourth year.

- Another New York study, by Boyd et al., examined the effectiveness of 3,766 new teachers who entered teaching in grades 4-8 in New York City through different pathways. The study found that elementary grade students of teachers who entered through alternate pathways, including new TFA recruits and Teaching Fellows, achieved significantly lower gains on tests in Reading/Language Arts and mathematics than students of new teachers who had entered after graduating from pre-service teacher education programs.4 Again, for the relatively small proportion of TFA and Teaching Fellows recruits who stayed, effectiveness improved after preparation was completed.

- A study comparing student achievement in five low-income school districts in Arizona found that the students of fully certified teachers significantly outperformed students of matched comparison teachers who were less-than-fully-certified, including Teach for America recruits, on all three sub-tests of the SAT-9: Reading, Mathematics, and Language Arts.5

Statement from The 74: Claim that the Talent Transfer Initiative study suggests that “combat pay” is ineffective. (p.21) Research found that the Talent Transfer Initiative improved student achievement and teacher retention while the incentive was paid.

LPI Response: The actual quotation in LPI’s study, Addressing California’s Emerging Teacher Shortage, does not state that “combat pay” is ineffective. It also accurately represents the Talent Transfer Initiative results — and the broader finding about long-term retention impacts, from that 2013 study by the Institute of Education Sciences, “Transfer Incentives for High-Performing Teachers: Final Results from a Multisite Randomized Experiment.”

The actual quote from LPI’s California Supply & Demand Study states:

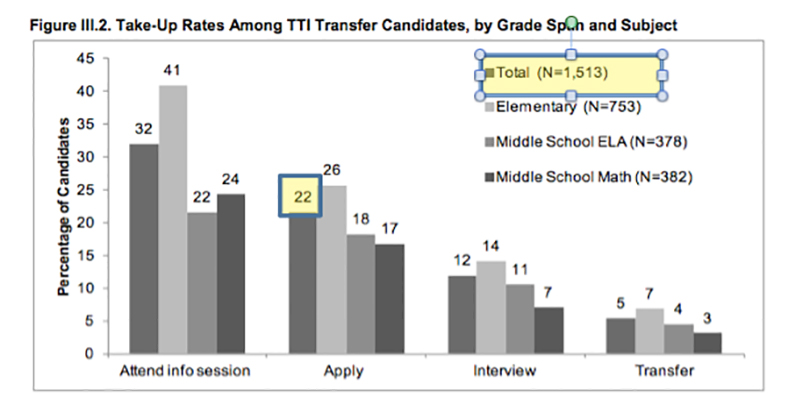

“Some policies have emphasized monetary bonuses or “combat pay” to attract teachers to high-need schools. However, the evidence shows that investments in professional working conditions and supports for teacher learning are more effective than offering bonuses for teachers to go to dysfunctional schools that are structured to remain that way… A more recent study of efforts to recruit high-performing teachers to struggling schools found that, among 1,500 such teachers in the Talent Transfer Initiative, only 22 percent were willing to apply to transfer to high-need schools for a two-year bonus of $20,000. Although the targeted teachers filled most of the 81 vacancies, attrition rates of these teachers soared to 40 percent after the bonuses were paid out.”

Every aspect of this quotation is supported with the data below from the Transfer Incentives study, as is the broader finding that such incentives do not provide long-term solutions to the problems, as their benefits had disappeared by year two of the program.

Quotes / Findings from the report, “Transfer Incentives for High-Performing Teachers: Final Results from a Multisite Randomized Experiment.”

“To gauge the response of the candidates in the TTI, we examined the rates at which candidates took part in various phases of the process (“take-up rates”) from attending information sessions to completing an application, interviewing, and ultimately transferring. In Figure III.2, we provide a breakdown of the take-up rates by grade span and subject using TTI program records.” (p.34)

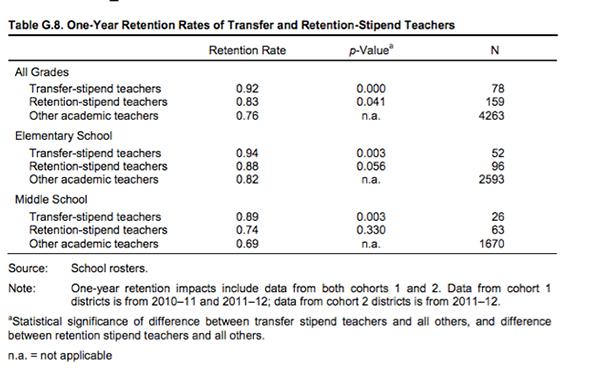

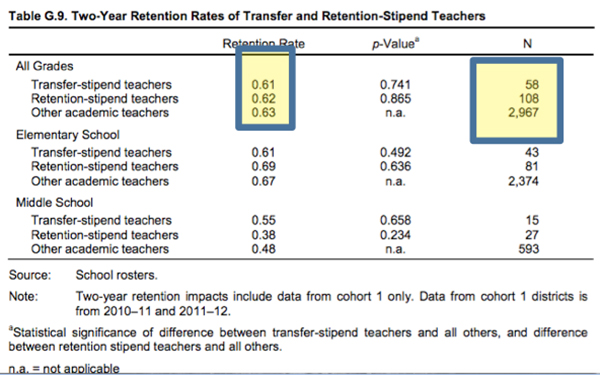

“Teachers receiving transfer or retention stipends through TTI had a higher school retention rate between program years 1 and 2 than teachers not receiving stipends (see Table G.8)…. Two-year retention was measured in the year after the completion of the program, after both the transfer and retention stipends had ended. There are no significant differences in two-year retention rates between stipend and non-stipend teachers” (p. G.13 & G.14). Table G.9 below shows that two-year retention rates were 61% for transfer-stipend teachers and 62% for retention-stipend teachers. They were 63% for non-stipend teachers.

Teaching Conditions and Retention: The statement in LPI’s report that “evidence show[ing] that investments in professional working conditions and supports for teacher learning are more effective than offering bonuses for teachers to go to dysfunctional schools,” is supported, among others, by studies from Harvard’s Project on the Next Generation of Teachers, including:

- A study by Kraft et al. (2012)6 found that teachers leave high-poverty, urban schools not because of the students, but most often because these schools fail to provide “instructional supports, an orderly environment and extra assistance for students.”7 The authors note that while many “researchers and policymakers have interpreted the transfer and exit patterns from [high-poverty urban] schools as evidence that teachers prefer not to work with low-income and minority students,” their “findings challenge the…assumptions inherent in policies that focus on school assignment rather than organizational improvement…and… suggest that policy solutions designed to attract and retain effective teachers by compensating them financially for teaching low-income students probably address the wrong problem.”8

- A recent paper by Simon and Johnson (2015)9 reviews six rigorous studies published within the last decade that have found that teacher turnover is most strongly related to “poor working conditions that make it difficult for them to teach and for their students to learn.”10 The authors’ analysis of the factors that influence teacher departures across the six studies concludes that “[a]lthough factors such as salary and work hours matter to teachers, working conditions that are social in nature likely supersede marginal improvements to pay or teaching schedules in importance.”11

- Johnson et al. (2012) combine a statewide survey of school working conditions with demographic and student achievement data from Massachusetts to examine job satisfaction. When they compare teachers who teach in schools of the same size and type that serve the same types of students and are subject to the same district policies and salary scale, the context of work “is a much stronger predictor of job satisfaction than all other characteristics combined.” The authors conclude that “guaranteeing an effective teacher for all students—especially minority students who live in poverty—cannot be accomplished simply by offering financial bonuses or mandating the reassignment of effective teachers. Rather, if the school is known to be a supportive and productive workplace, good teachers will come, they will stay, and their students will learn.”12

-

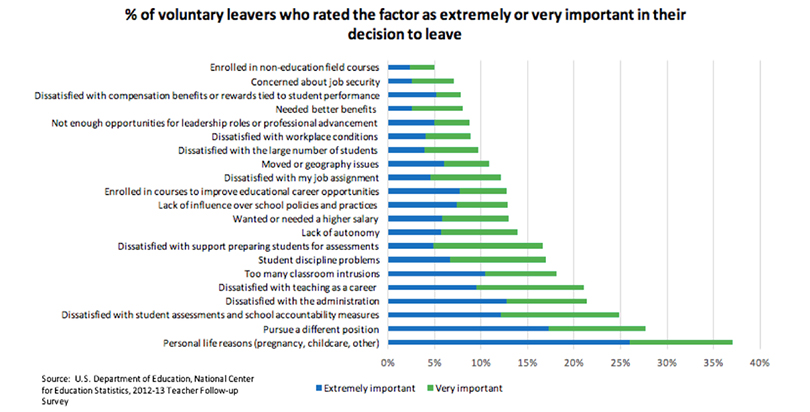

The most recent nationally representative surveys — the Schools and Staffing (SASS) and Teacher Follow-Up Surveys (TFS) of current and former elementary, middle and high school teachers — from 2012-13 highlight the factors contributing to teachers’ decisions to leave the profession, suggesting that factors related to workplace conditions contribute more frequently to teachers’ decisions to leave the profession than salary. For example, dissatisfaction with school accountability, with the administration, and with the support for preparing students for assessments were cited more frequently than salaries as factors that were “extremely” or “very important” in teachers’ decisions to leave the profession.13 A summary of the results is in the figure below.

1. Thomas J. Kane, Jonah E. Rockoff & Douglas O. Staiger, What does certification tell us about teacher effectiveness? Evidence from New York City. Working Paper 11844, Cambridge, Mass.: National Bureau of Economic Research, March 2006, available at http://gseweb.harvard.edu/news/features/kane/nycfellowsmarch2006.pdf.

2. Charles T. Clotfelter, Helen F. Ladd, and Jacob L. Vigdor, “Teacher Credentials and Student Achievement in High School,” The Journal of Human Resources 45, no. 3 (2010): 655–81, doi:10.1016/j.econedurev.2007.10.002.

3. E.g., Linda Darling-Hammond, Gatlin, Su Jin Jez, & Julian Vasquez Heilig, Does Teacher Preparation Matter? Evidence about Teacher Certification, Teach for America, and Teacher Effectiveness, 13 Educ. Policy Analysis Archives (Oct. 2005) at 2-3, available at http://epaa.asu.edu/epaa/v13n42/v13n42.pdf (hereinafter Darling-Hammond 2005).

4. Donald Boyd, et al., How Changes in Entry Requirements Alter the Teacher Workforce and Affect Student Achievement, November 2005, available at http://www.teacherpolicyresearch.org/portals/1/pdfs/how_changes_in_entry_requirements_alter_the_teacher_workforce.pdf.

5. Ildiko Laczko-Kerr & David C. Berliner, The Effectiveness of “Teach for America” and Other Under-certified Teachers on Student Academic Achievement: A Case of Harmful Public Policy, 10 Educ. Policy Analysis Archives (Sept. 6, 2002), available at http://epaa.asu.edu/epaa/v10n37/.

6. Matthew A. Kraft et al., “Committed to Their Students but in Need of Support: How School Context Influences Teacher Turnover in High Poverty, Urban Schools,” Project on the Next Generation of Teachers, 2012, doi:10.1007/s13398-014-0173-7.2.

7. Matthew A. Kraft et al., “Committed to Their Students but in Need of Support: How School Context Influences Teacher Turnover in High Poverty, Urban Schools,” Project on the Next Generation of Teachers, 2012, doi:10.1007/s13398-014-0173-7.2.

8. Matthew A. Kraft et al., “Committed to Their Students but in Need of Support: How School Context Influences Teacher Turnover in High Poverty, Urban Schools,” Project on the Next Generation of Teachers, 2012, doi:10.1007/s13398-014-0173-7.2.

9. Nicole S. Simon and Susan Moore Johnson, “Teacher Turnover in High-Poverty Schools: What we know and can do,” Teachers College Record 117, no. 3 (2015): 1-36, 10.

10. Nicole S. Simon and Susan Moore Johnson, “Teacher Turnover in High-Poverty Schools: What we know and can do,” Teachers College Record 117, no. 3 (2015): 1-36, 1.

11. Nicole S. Simon and Susan Moore Johnson, “Teacher Turnover in High-Poverty Schools: What we know and can do,” Teachers College Record 117, no. 3 (2015): 1-36, 27.
