Analysis — One Big Takeaway From Two New Investigations About Real Estate and School Ratings: You Can’t Tell a Good School Without Measuring Student Growth

Updated Dec. 17

Two recent stories about real estate and school rankings set off discussion over how to properly identify quality schools. What these debates show is the importance of tracking how well a school actually educates the children it has — that is, measuring how much students grow over time.

One of the stories, a Chalkbeat investigation, concluded that the school rating algorithm used by GreatSchools.org helped push families toward more segregated schools. The other, a three-year investigation of unequal treatment by real estate agents on Long Island, caught some suggesting to homebuyers, “Follow the school bus, see the moms that are hanging out on the corners.”

Both stories reflect on the way rankings and perceptions define school quality. But this definition has more to do with the composition of a school’s student body than the school’s ability to educate those students. And while the school bus comment is at worst racist and at best classist, it’s also wrong when it comes to school quality.

We’ve seen this in other settings. Families clamor for seats at heavily oversubscribed exam schools in Boston, New York City and Chicago, even as research suggests that the schools, including the gifted class of peers who attend them, make no difference to the students who eventually enroll. Some, but not all, of these studies have found that low-income, black and Hispanic students benefit from enrolling at these schools, but they’re largely blocked from doing so because of the overwhelming competition to get in. Even if we could make admissions rules more equitable, these types of schools would still suffer from an “elite illusion” that infects our perceptions of school quality.

Policymakers can make the same mistakes. Under then-Mayor Cory Booker, Newark created more charter schools, closed some underperforming traditional public schools and adopted a new teacher evaluation system. Researchers have found that the Newark reforms worked: The city outperformed the rest of the state in subsequent years, and more students were attending high-performing schools. But the reforms didn’t work immediately; they took time to take effect and to show gains in students’ academic growth.

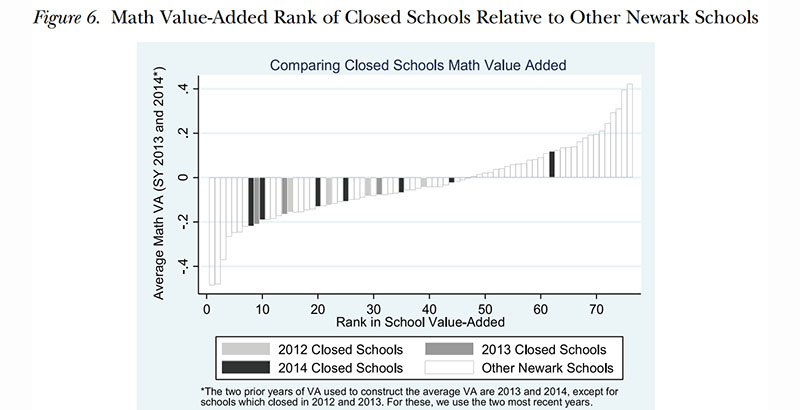

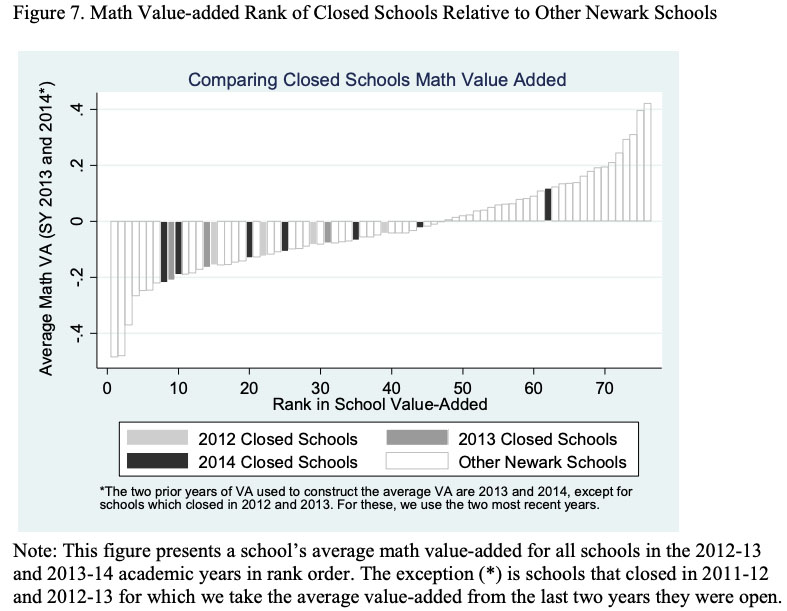

We can’t say for certain what caused the delay, but take a look at a graphic from the NBER research paper analyzing the Newark reforms. It ranks schools by their success at improving student test scores in math and shows which of them Newark closed. Importantly, closure decisions were made on the basis of enrollment and student achievement rates, not student growth. There are geographic factors that don’t show up in a ranking like this, but it’s hard not to conclude that Newark closed some of the “wrong” schools. On average, the closed schools had lower value-added scores than the rest of the district, but at least one closed school was above the citywide average, and Newark did not close the worst of the worst schools.

It’s possible, and even likely, that other factors were at play here, such as facilities or location, but there’s no question that Newark’s closure decisions were not fully optimized in terms of student growth.

The only way out of this conundrum is for states and districts to take the lead in measuring student growth rates and embedding them in a more prominent way in school rating systems. For example, the Office of the State Superintendent of Education in Washington, D.C., has run the numbers on what its “STAR” accountability system would look like both with and without growth. It concludes:

“…schools at the lower end of the STAR rating distribution tend to benefit from the inclusion of growth metrics, whereas schools at the upper end of the STAR rating distribution would tend to benefit from the exclusion of growth metrics.”

That is, the D.C. school ratings would have the same problems as the real estate agents on Long Island and the reformers in Newark if they didn’t include student growth. The D.C. example is a reminder that if we don’t measure student growth, we’ll have the wrong definition of school quality. Worse, we’ll favor schools whose students are already doing well, while harshly judging schools that actually help students learn the most.
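To make the distinction concrete, here is a minimal sketch in Python of how an achievement-based ranking and a growth-based ranking can point in opposite directions. Everything in it is an assumption for illustration: the two schools, their scores and the proficiency cutoff are invented, and the simple year-over-year gain used here is a stand-in for growth, not the value-added model from the Newark paper or the formula behind D.C.’s STAR ratings.

```python
from statistics import mean

# Hypothetical (prior_score, current_score) pairs, one per student.
# All numbers are invented for illustration only.
SCHOOLS = {
    "School A": [(82, 84), (90, 91), (78, 79), (88, 90)],
    "School B": [(55, 66), (60, 72), (48, 61), (70, 79)],
}

PROFICIENT = 75  # hypothetical proficiency cutoff


def achievement_rate(students):
    """Share of students at or above the cutoff this year."""
    return sum(current >= PROFICIENT for _, current in students) / len(students)


def mean_growth(students):
    """Average one-year score gain (a crude proxy for student growth)."""
    return mean(current - prior for prior, current in students)


def rank(metric):
    """School names ordered from best to worst on the given metric."""
    return sorted(SCHOOLS, key=lambda name: metric(SCHOOLS[name]), reverse=True)


by_achievement = rank(achievement_rate)
by_growth = rank(mean_growth)

print("Ranked by achievement:", by_achievement)
print("Ranked by growth:     ", by_growth)
```

In this made-up data, School A comes out on top when schools are ranked by the share of students who are proficient, while School B comes out on top when they are ranked by how much students gained over the year. A rating built only on the first measure would miss the school where students learned the most.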

Chad Aldeman is a senior associate partner at Bellwether Education Partners and the editor of TeacherPensions.org.
