Stanford professor emeritus Linda Darling-Hammond was recently named the most influential education scholar in the country, and for good reason. She just started an education think tank, the Learning Policy Institute; she is regularly quoted in the media on a range of education topics; and she commonly appears on education panels. She was a member of Obama’s presidential transition team, was rumored to be in the running for secretary of education in 2008, and, with a new president coming, may very well be considered for that job again.

In short, Darling-Hammond’s credentials are impeccable and her influence is broad.

But my review of Darling-Hammond’s recent research, public statements, and opinion writing calls into question some of her claims on hot-button issues including testing, teacher quality and charter schools in New Orleans.

Through a spokesperson, Darling-Hammond and colleagues provided a thorough and thoughtful response to my criticisms. I reference and quote parts of her reply throughout this piece, and we’ve published the response in its entirety here. I encourage all readers to take a look.

New Orleans evidence

Darling-Hammond recently co-wrote a study, published through the Stanford Center for Opportunity Policy in Education, that was generally critical of school reform efforts in New Orleans, where the vast majority of schools are now charters. The research raises a number of important issues related to how students experience the choice system in the city.

However, the study makes problematic claims about student outcomes. The authors cite an (unpublished) report [1] that uses National Assessment of Educational Progress (NAEP) data to find that Louisiana “charter school students performed between two to three standard deviations lower than comparable public school students in district-run schools.”

Darling-Hammond and co-authors also claim, “NAEP data show that, despite having far more charter activity than any other state, Louisiana continues to be one of the lowest-performing states in the U.S.”

The report concludes, “Studies on the effects of the New Orleans reforms on student achievement vary substantially in their conclusions based on the comparisons used.”

But Tulane University economist Doug Harris, who has extensively studied New Orleans schools [2], said in an email, “Unfortunately, [NAEP] cannot provide evidence about the effectiveness of the New Orleans charter-based reforms. The NAEP data force us to compare all charter schools in the state, most of which are in New Orleans, to all public schools in the state, almost none of which are in New Orleans. It's not a valid comparison.”

Darling-Hammond’s report does not include a study by MIT researchers showing that students in schools taken over by charters experienced large achievement gains.

Harris also explained, “The NAEP data aren't useful and all the other signs on high school graduation and college entry point, so far, in a positive direction. We still have more analysis to do, especially on long-term outcomes, but what's remarkable so far about New Orleans is how consistently positive the results seem across a wide range of metrics.”

This is not simply an instance of different studies reaching different conclusions. Using raw NAEP data to make assertions about specific policies, as Darling-Hammond and coauthors have done, is widely seen by researchers as entirely inappropriate for making causal inferences. Using state-level NAEP data to shed light on city-level reforms is doubly wrong.

Darling-Hammond and colleagues dispute this characterization of the report, writing, “We did not cite the study that used state-level NAEP data to draw inferences specifically about New Orleans, nor did we declare that the ‘New Orleans charter-based reforms are ineffective.’” I encourage readers to take a look at the study, particularly pages 41–43, to judge for themselves.

Standardized tests do (imperfectly) predict life outcomes

At an American Federation of Teachers conference last year, Darling-Hammond asserted [3], “What we see from a lot of research is that the kinds of standardized tests that we use [in the United States] predict very little about success later in life. They don't add anything really at all to predicting college success and nothing about life success.”

But contrary to Darling-Hammond’s claim to “a lot of research,” the evidence does not seem to support such broad pronouncements.

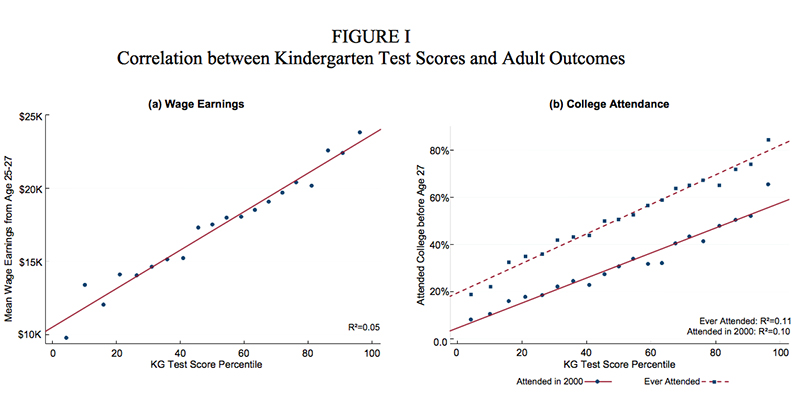

In an email, Jonah Rockoff, an education researcher at Columbia University said, “Obviously standardized tests predict later life outcomes.” He pointed to a study showing that standardized multiple choice test scores as early as kindergarten were associated with college attendance and income as an adult. Rockoff has also co-authored

research showing that teacher impacts on student’s standardized test scores were related to outcomes including earnings, teen pregnancy rates, and college attendance.

Source: “How Does Your Kindergarten Classroom Affect Your Earnings? Evidence From Project STAR,” National Bureau of Economic Research

Morgan Polikoff, a University of Southern California professor, agreed. In an email, speaking generally about the accuracy of testing, he wrote, “While everyone acknowledges that standardized tests don't measure everything we care about, there's a good deal of research showing that these tests do predict important long-term outcomes.”

Other research has found that policies like class-size reduction and school accountability produce test-score gains that also show up in long-run outcomes such as income and educational attainment. Certainly skills not measured by tests matter a great deal, perhaps even more than test outcomes. But many of the studies focusing on non-cognitive skills also show that standardized test scores matter.

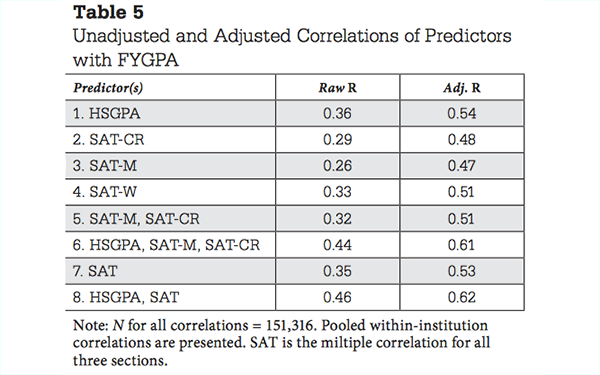

Darling-Hammond points out in her response that high school GPA predicts college GPA marginally better than SAT or ACT tests scores, but even the

studies she cites suggest that test scores do in fact predict college outcomes to some extent.

Source: “Validity of the SAT for Predicting First-Year College Grade Point Average,” The College Board FYGPA = first-year college GPA HSGPA = high-school GPA

Sweeping generalizations based on limited data

In a piece for The Huffington Post, Darling-Hammond claims, “Federal policy under No Child Left Behind (NCLB) and the Department of Education's 'flexibility' waivers has sought to address this problem [of the achievement gap and lagging international test scores] by beefing up testing policies — requiring more tests and upping the consequences for poor results: including denying diplomas to students, firing teachers, and closing schools. Unfortunately, this strategy hasn't worked. In fact, U.S. performance on the Program for International Student Assessment (PISA) declined in every subject area between 2000 and 2012 — the years in which these policies have been in effect.”

Darling-Hammond has decried test-based accountability policies as amounting to “test-and-punish” and has repeatedly used PISA data to criticize school reform policies.

These are broad claims based on data that should not be used for this purpose, however. Here’s why: To assess specific policies, like those Darling-Hammond is criticizing, it’s crucial to isolate the impact of those policies.

Take this example: For years some have claimed that supposedly flat national test data show that spending more money on education has not improved outcomes. However, rigorous research that isolates the impact of additional resources strongly suggests that they make a big difference.

Similarly, a variety of factors affect PISA scores, including all the policies in schools and everything outside of schools that affects student achievement, so looking at trend data to judge specific policies is not appropriate. Efforts to isolate the effects of test-based accountability broadly and No Child Left Behind specifically have generally found positive results, particularly for math, even when looking at rigorous low-stakes national tests.

Certainly there have been unintended consequences of test-based accountability, but as a careful review of the research by Stanford’s Susanna Loeb and Northwestern’s David Figlio concluded, “The preponderance of evidence suggests positive effects of the accountability movement in the United States during the 1990s and early 2000s on student achievement, especially in math.” Stanford professor Tom Dee previously told The 74 that No Child Left Behind had produced “meaningful, important, and cost-effective improvements.”

Irrespective of the methodological problems with using PISA scores to evaluate specific policies, describing the scores as flat or declining masks important differences between student groups. A recent study by Martin Carnoy, Emma García, and Tatiana Khavenson for the Economic Policy Institute found that disadvantaged American students have made large gains on international tests over the past decade, gains that were partially hidden by the fact that more advantaged students had slid backwards.

On another international test, the Trends in International Mathematics and Science Study, the report also found significant gains among disadvantaged students in several states. While there needs to be a discussion about falling achievement among advantaged students, there should also be an acknowledgement of gains made by those who are less advantaged.

Carnoy, García, and Khavenson also point out that “It is extremely difficult to learn how to improve U.S. education from international test comparisons” and instead they examine policies associated with national test-score gains in the United States. “We … found that students in states with stronger accountability systems do better on the NAEP math test, even though that test is not directly linked to the tests used to evaluate students within states,” they wrote.

Darling-Hammond and colleagues respond in part, “No raw data isolates the impact of a single policy, but it proves the point that if other factors — such as growing poverty, school funding disparities or other factors that are discussed in the [Economic Policy Institute] study — are responsible for the overall declines, policy should be addressing those factors to make a difference in overall performance.”

I would just say that addressing poverty and implementing (thoughtful) test-based accountability are not at all at odds, and in my view policymakers should do both. Again, I strongly encourage readers to review Darling-Hammond’s entire reply on this point, in which she raises some important limitations of the research I cite.

Research mixed on alternative teaching routes

A recent policy paper issued through the Learning Policy Institute and written by Darling-Hammond, Roberta Furger, Patrick Shields, and Leib Sutcher raises concerns about a teacher shortage in California and makes recommendations for recruiting and retaining more teachers.

The report suggests that traditionally certified teachers are more effective than others. Throughout, the paper refers to non-traditional programs as “substandard” and teachers who go through alternative certification as “underprepared” and the “least well-prepared.”

This is a complicated area of research with evidence on both sides, and the report dutifully cites three different studies to support its claim that “the assignment of teachers who have not undergone preparation…has been found to harm student achievement.”

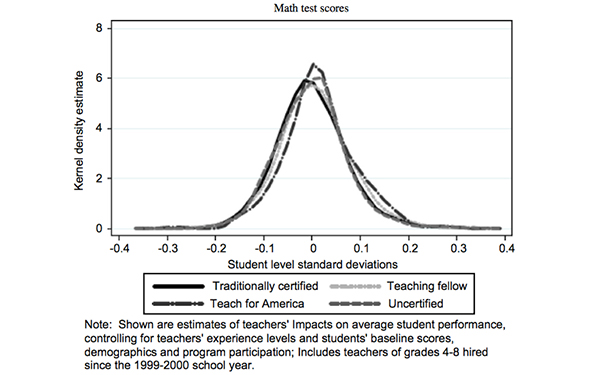

But the research is much more mixed than Darling-Hammond and coauthors let on.

Many rigorous studies have found that some teachers with significantly less training do about as well, and in a

few cases better than those who have gone through traditional and longer preparation.

Even studies that find traditionally trained teachers outperform others usually suggest the differences on average are fairly small and that there is much more variation within the same preparation pathway than between different ones.

Source: What does certification tell us about teacher effectiveness? Evidence from New York City, Economics of Education Review

The Learning Policy Institute’s response says the terms “substandard” and “underprepared” are “not making a claim about effectiveness” but adds that “there is also research demonstrating that less-than-fully prepared teachers are less effective.” Darling-Hammond cites several studies to support her claim and argues that some of the research I cite actually supports her position.

Fair enough — these studies should be taken seriously and we could go back and forth on this for a while.

But the research consensus seems to be that the difference in effectiveness between traditionally prepared teachers and alternatively certified ones is very small, to the extent it exists at all. According to Dan Goldhaber, a professor at the University of Washington Bothell and vice president for the American Institutes for Research, “There isn’t much evidence from the existing literature that differences in teacher preparation translate into significant differences in the outcomes of students.”

Jim Wyckoff, a professor at the University of Virginia whose research Darling-Hammond cites in her brief, told me in an email, “The overwhelming takeaway is that currently we have very little evidence on the efficacy of teacher preparation broadly defined. We know remarkably little about best practices. I see the debate as to whether alternative certification or traditional preparation is most effective as a distraction at best.”

Wyckoff adds, “What evidence exists on the horse race suggests that in the schools where we observe both [alternatively certified] and [traditionally prepared] teachers there appears to be small differences at best. The big result is that the [quality] distributions appear to largely overlap.”

In my view, it is a stretch to brand non-traditional California teaching routes as substandard, which I think strongly implies a negative judgement about effectiveness. Darling-Hammond and coauthors offer no state-specific evidence to support this sweeping claim.

Claims about pay incentives not backed up

In the same policy brief, Darling-Hammond and co-authors are skeptical of teacher recruitment efforts through pay incentives. They write: “Some policies have emphasized monetary bonuses or ‘combat pay’ to attract teachers to high-needs schools. However, the evidence shows that investments in professional working conditions and supports for teacher learning are more effective than offering bonuses for teachers to go to dysfunctional schools that are structured to remain that way.”

But the authors do not cite any evidence that compares the efficacy of separate investments in working conditions versus pay. [4] Goldhaber said, “I have never seen this kind of study done so it’s hard to justify [that] statement.”

No one doubts that working conditions matter — and in her response to me Darling-Hammond references several studies showing as much — but it’s not evident that investments in working conditions outweigh investments in pay, perhaps because it’s not entirely clear what specific policies will improve working conditions.

The authors do not mention a study that found that paying teachers in high-poverty North Carolina schools small bonuses (about $2,500 [5]) had significant positive effects on retention.

Instead, the paper unfavorably cites research in which teachers were given $20,000 to transfer to a low-performing school. Most of the vacancies were filled and student achievement increased significantly [6] because of the pay incentive, a point which is not acknowledged in Darling-Hammond’s report. She and coauthors write, “Attrition rates of these teachers [who transferred schools] soared to 40 percent after the bonuses were paid out.”

This account is technically accurate, as Darling-Hammond and colleagues point out in the response to me: “Every aspect of this quotation is supported with the data … from the Transfer Incentives study, as is the broader finding that such incentives do not provide long-term solutions to the problems, as their benefits had disappeared by year two of the program.”

In my view, that’s quite misleading, though, as the original study says: “The transfer incentive had a positive impact on teacher-retention rates during the payout period; retention of the … teachers who transferred was similar to their counterparts in the fall immediately after the last payout.”

In other words, the incentive worked while it lasted, and once it ran out, teachers who had previously received it were no more likely to leave the classroom than their colleagues. And again, overall the program had a positive effect on student achievement while it was in effect.

—

None of this is to say that policies and positions advanced by Darling-Hammond are necessarily wrong. Maybe California does have a teacher shortage that can be addressed partially through improvements in working conditions; maybe New Orleans charter reforms were less successful than the test-score increases suggest; maybe alternatives to test-based accountability would have been preferable, and PISA scores would have risen.

Unfortunately, we’re not having a research-driven debate on these issues. Claims with a limited research base — or a hotly disputed one — are too often accepted based on expert opinion.

And in my view, Darling-Hammond avoids, misuses, or fails to fully engage with key research in some of her arguments.

Once again, readers can, and should, read Darling-Hammond and her colleagues’ response here.

Footnotes:

1. Darling-Hammond’s report cites another report, published by the Network for Public Education, which cites this unpublished NAEP study. The Network for Public Education brief was sharply critiqued by one researcher as providing “no evidence whatsoever about the effects of New Orleans’ reforms.”

2. It’s important to note that Harris’ full study, which finds large test score increases because of New Orleans reforms, was published only very recently, after Darling-Hammond’s report. It will be important for researchers to examine his methods and discuss different ways to measure the effects of New Orleans’ reforms on student achievement.

3. See her comments in this video, beginning at around 7:30.

4. The authors point to evidence that monetary bonuses have not always generated enough interest from teachers to fill all open positions targeted by such programs. That may be so, but that does not address whether such pay incentives are a useful policy or a cost-effective use of resources.

5. The amount paid out was $1,800 per teacher in 2001. Adjusted for inflation, that’s $2,408 in 2015 dollars.

6. The achievement effects were positive and statistically significant in elementary schools, but not middle schools. The combined results were positive and statistically significant in years one and two in math, and year two in reading.
