Why Robots Are Not Effective Tools for Supporting Autistic People

Despite a push from investors, new research shows robot-human interaction does not deliver the help that autistic people need.

Get stories like this delivered straight to your inbox. Sign up for The 74 Newsletter

Even as the education technology industry rushes to develop robots that can deliver therapy to autistic children, a new study by researchers at the University of California San Diego's Jacobs School of Engineering finds that the devices are ineffective and unwanted.

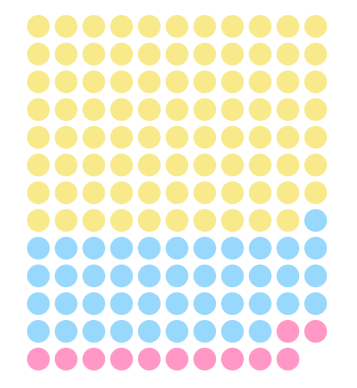

An autistic PhD candidate in computer science, Naba Rizvi is the lead author of an analysis of 142 papers published between 2016 and 2022 that focused on robots’ interactions with autistic people. She and her colleagues found that almost all of the research excludes the perspectives of the autistic subjects, pathologizes them by using an outdated understanding of the neurotype, and contains little, if any, evidence that therapies delivered by robots are effective.

More than 93% of the studies start with the now-controversial stance that autism is a condition that can and should be cured. Nearly all test the use of robots to diagnose the condition or to teach autistic children to interact in ways that make them seem more neurotypical, such as making eye contact.

While most research on human-robot interaction starts by asking the subjects what their needs are, nearly 90% of the researchers in Rizvi's sample did not ask autistic people whether they want the technology. Fewer than 3% included autistic people in framing the theory being investigated, and just 5% incorporated their perspectives in designing the research.

“Even clinicians are not convinced of their effectiveness, and minimal progress has been made in making such robots clinically useful,” Rizvi writes. “In fact, research even suggests that this use of robots may be counterproductive and negatively impact the skills they are designed to hone in autistic end-users.”


Proponents argue that robots can not only deliver behavior therapy more cheaply but also appeal more to children than human therapists do. Investors forecast the technology could become the centerpiece of a market that may soon be worth more than $3 billion. Though not yet common in special education classrooms, robots programmed to intervene with autistic children are being marketed to schools and even families.

Some of the early research the robotics industry has recently relied on in designing its experiments described autistic children as less human than chimpanzees, Rizvi adds: “These systems promote the idea that autistic people are ‘deficient’ in their humanness, and that robots can teach them how to be more human-like. This echoes foundational work that has questioned the humanity of autistic people, and proposed non-human entities such as animals may be more human than them.”

Most of the research the team reviewed was published in robotics journals, not autism journals. Seventy-six of the studies used anthropomorphic or humanoid robots to teach social skills, while 15 relied on devices designed to look like animals. One used a robot to diagnose "abnormal" social interactions.

Researchers leaned on harmful tropes that describe autistic people as robot-like and robots as intrinsically autistic. Many of the papers reviewed also accept an old and controversial premise that autistic people are not motivated to interact socially with others. Fewer than 10% included representative samples of girls, whose autistic "behaviors" are more likely to show up as depression and other mental health conditions.

The report comes as a rift is widening between proponents of behavioral therapy and autistic adults who say the intervention, commonly called applied behavior analysis, is inhumane. A growing body of research suggests that efforts to train autistic children to act and appear more like their neurotypical, or non-autistic, peers are ineffective and often traumatizing.

In applied behavior analysis, a therapist uses positive and negative reinforcement to attempt to "extinguish" mannerisms perceived as undesirable and to replace them with behaviors considered "normal." Therapists work one-on-one with a child, often 10 to 40 hours a week. It is repetitive and expensive.

Many autistic adults who have undergone the therapy note that some of the mannerisms it attempts to eliminate, such as hand-flapping or rocking, are harmless ways to compensate for overstimulation or to express positive emotions. Nonetheless, the therapy is widely considered the “gold standard” of autism interventions.

Rizvi says she’s dismayed but not surprised by the push to develop automated therapists. The use of robotics in medicine is exploding, and almost all of the researchers in her sample framed their work using what advocates call the “medical model” of disability. Historically, disabilities have been seen as medically diagnosable deficits to be treated or cured.

Over the past couple of decades, however, people with disabilities have increasingly pushed for the adoption of a “social model,” which holds that a lack of inclusion in all realms of public life is the central issue. Autistic adults have advocated for better representation in research, so that more studies are geared toward making education, employment, housing and other sectors of society more accommodating.

Just 6% of the papers Rizvi and her colleagues reviewed start from a social model. This is problematic, they say, because many autistic people have needs that improved technology could address. Non-verbal students, for example, benefit from evolving "augmentative and alternative communication" devices, which families often struggle to get schools to provide.

Rizvi’s main research focus is on the development of ethical artificial intelligence. Because the datasets AI is “trained” on are inherently biased, so are the resulting algorithms, she explains. Research has shown, for example, that resumes that mention jobs in disability agencies or support capacities are automatically scored lower by AI than those that don’t.

Another example is AI-enabled online content moderation. Social media posts and comments that mention disability-related topics are often rejected as toxic, Rizvi says.

“When it comes to content moderation the data sets don’t always represent the perspectives of the communities,” she says. “And they do this thing where, say, if you have three people trying to agree on whether or not a sentence is ableist, the automatic assumption is that the majority vote is the right one.”

"Are Robots Ready to Deliver Autism Inclusion? A Critical Review" was presented at a recent Association for Computing Machinery conference. The presentation includes suggestions for ensuring research is inclusive and avoids harmful stereotypes and historical misrepresentations, which are also summarized on Rizvi's own website.

