In 2010, then–U.S. Secretary of Education Arne Duncan promised to usher in the “next generation of assessments” that move “beyond the bubble tests.”

Duncan invested hundreds of millions1 of federal dollars in supporting the development of two sets of Common Core–aligned tests, called PARCC2 and Smarter Balanced, that are now in use in many states across the country.

The goals of these tests were ambitious. As Duncan put it, “I am convinced that this new generation of state assessments will be an absolute game-changer in public education. For the first time, millions of schoolchildren, parents, and teachers will know if students are on-track for colleges and careers, and if they are ready to enter college without the need for remedial instruction. … For the first time, many teachers will have the state assessments they have longed for: tests of critical thinking skills and complex student learning that are not just fill-in-the-bubble tests of basic skills but support good teaching in the classroom.”

However, a number of states have dropped the federally supported tests and instead created their own versions of Common Core–aligned assessments. By recent counts, about half of the states that adopted Common Core are using either Smarter Balanced or PARCC; others are using home-brewed exams that purport to align to the standards.

So has Duncan’s vision come to pass? Here’s what we know about the new tests and how they differ from (and resemble) the ones they replaced:

- Smarter Balanced and PARCC are pretty well aligned with the Common Core standards.

A new study by Nancy Doorey and Morgan Polikoff, released by the Fordham Institute, a pro–Common Core think tank, compares Smarter Balanced and PARCC to the Massachusetts state test — considered by some the gold standard of state assessments — and the ACT Aspire, a test used by three states and made by the company that also produces the college admissions exam, the ACT.3

The report finds that the new assessments generally do a better job, particularly in English, of emphasizing the most important content in the Common Core and requiring “the range of thinking skills, including higher order skills, called for by those standards.”

Whether this is a good thing depends on what you think of the Common Core and whether its emphasis on gathering evidence from the text and explaining mathematical thinking is worthwhile. But for states that are using one of the federally backed tests this is certainly good news, since academic standards and the tests used to assess them should be aligned.

As Polikoff put it, “Even if you’re not a fan of Common Core, [it] is the set of standards that’s in place in most states … We want those assessments to accurately assess what’s in the standards.”

-

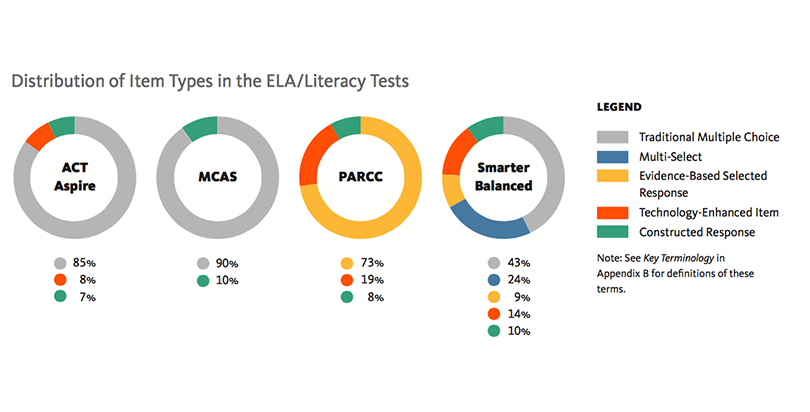

Smarter Balanced and PARCC have a lot more questions that aren’t multiple choice.

True to Duncan’s word, both sets of new assessments significantly reduce the reliance on multiple choice items as compared to the ACT Aspire and the Massachusetts state test. In fact, according to the Fordham study, most questions on both the PARCC and Smarter Balanced tests are not traditional multiple choice.

However, it’s not readily apparent just how different these new types of questions actually are. Some combine traditional multiple choice questions with questions that ask students to cite sections of the text in their answer; others require students to perform a task on a computer, such as dragging and dropping or highlighting text.

Check out sample questions from Smarter Balanced and PARCC to judge for yourself.

- Smarter Balanced and PARCC take a lot longer for students to complete.

Probably because they include so many non-traditional questions, which are more time intensive, Smarter Balanced and PARCC take about five-and-a-half and seven-and-a-half hours, respectively. In contrast, both the ACT Aspire and Massachusetts exam take a little over three hours, according to the Fordham study.4

In response to complaints about length, PARCC shortened its test by an hour and a half, though at six hours it’s still significantly longer than the ACT Aspire and Massachusetts test. Meanwhile, New York recently announced that its Common Core–aligned assessments would be untimed. This highlights a key trade-off in test design: more in-depth, challenging tasks mean longer test times. But state officials may be damned if they do and damned if they don’t, since members of the growing opt-out movement have complained about both too many multiple choice questions and tests that take too long.

- Smarter Balanced and PARCC both have some quality issues to work out.

Although the Fordham report gives the tests high marks on alignment with the Common Core, the study also raises some pointed questions about basic quality issues with the exams.

On the math tests, reviewers gave the ACT Aspire and Massachusetts exam higher marks on overall quality than both Smarter Balanced and PARCC. A small number of questions on Smarter Balanced and PARCC were difficult to read or inaccurate. In a few cases, questions appeared to have more than one possible right answer, the Fordham report notes.

On the English tests, ACT Aspire, the Massachusetts state test, and PARCC all got top marks, but Smarter Balanced got a lower rating because of instances of spelling errors and questions with more than one potentially correct answer.

The report notes, “Although this concern applies to a small percentage of items, the review panels expressed the need that a very high bar be set on the quality of items used on consequential tests.”

- Next generation assessments are more likely to be completed on a computer, but that’s led to some major problems.

Students now take Smarter Balanced, PARCC and a number of state tests on a computer, with the hope that the technology will allow for more item variety, fewer security issues, and quicker turnaround of results.5

But this ideal has run into numerous challenges as states across the country, including Indiana, Tennessee, Montana, Wisconsin, and Nevada, among others, have struggled to get technology-based testing off the ground.

Smarter Balanced and PARCC also have paper-and-pencil versions of their tests that are supposed to be used if a computer-based option is not feasible. But a recent report from Education Week showed that PARCC scores were significantly lower for students who took the exam by computer, raising questions about whether the two versions were equivalent. Some research has found that students less familiar with technology don’t perform as well on computer-based exams.

- PARCC predicts college success about as well as the Massachusetts state test and the SAT.

A 2015 study found that PARCC, the Massachusetts state exam, and the SAT all were modestly predictive of first-year grades in college; none stood out as better or worse.6

On the one hand, this finding suggests that PARCC is no better — at least in terms of assessing college readiness — than the test Massachusetts was already using. On the other, the Massachusetts exam has long been seen as a high-quality test, so for PARCC to be viewed as equally good can be portrayed as positive news. Of course, predicting first-year grades may not be the only way to judge the quality of state exams; for instance, there is also alignment with state standards.

For what it’s worth, officials in Massachusetts decided to adopt a hybrid exam that includes aspects of both PARCC and its existing state test.

- PARCC and Smarter Balanced may help better distinguish between more and less effective teaching.

A new study7, released by Harvard’s Center for Education Policy Research, found that PARCC and Smarter Balanced tests had more “instructional sensitivity” than previous tests used in several states.8 What this means is that there was more fine-tuned variation in teachers’ impacts on how students scored on the Smarter Balanced and PARCC tests than on past state tests. This suggests that the new assessments may be better suited for use in teacher evaluation.

- Some teachers say the new tests are better, but most don’t want them to count in teacher evaluation.

The National Network of State Teachers of the Year, which supports Common Core, assembled a panel of top teachers to compare PARCC and Smarter Balanced to previous state tests.9 The teachers overwhelmingly believed that the new tests were better indicators of quality teaching and learning than the old ones. However, the Harvard study, mentioned earlier, found that teachers in several states felt only moderately prepared to teach students what they needed to know for the Smarter Balanced or PARCC assessments.

Teachers are skeptical of having the new tests count towards their evaluation: a Gallup poll reported that 80 percent did not want new Common Core test scores ever linked to their evaluations. Still, the Harvard study found students scored higher on math tests when those tests were connected to their teacher’s evaluation.10

- There’s still a lot to learn about these new assessments.

One of the most important unanswered questions is how PARCC and Smarter Balanced compare to the many state assessments that have been redesigned to align with the Common Core. There just isn’t much evidence on whether these tests are Common Core–aligned in name only — like many textbooks — or are actually doing a good job assessing the skills in the standards.

It’s also not clear how to think about some of the tradeoffs and purported benefits of these new tests. Is the extra time required worth it? How valuable is the ability to compare student test scores across states, even as many states have dropped out? Will the technological glitches eventually work themselves out?

And perhaps the key unknown is a political one: Will any new states adopt Smarter Balanced or PARCC, or will any more leave?

Footnotes:

1. Still, tests account for only a small fraction of education spending nationally: just $27 per student, according to a 2012 study.

2. PARCC stands for Partnership for Assessment of Readiness for College and Careers.

3. It’s important to note that in an appendix to the report, responses from all four testing groups indicate ongoing efforts to improve the tests, including in response to findings from the Fordham study.

4. These estimates are based on the average time to complete both the math and English sections for each test, in grades 5–8.

5. Another potential benefit of computer-based assessments is that they can be “adaptive” (as opposed to “fixed form”). This means that the test changes difficulty based on students’ performance, measured by how they do on previous questions. The idea is that the questions will be at an appropriate level to challenge students, and can measure growth of both high- and low-achieving students. Smarter Balanced’s summative assessment is computer adaptive, but PARCC’s isn’t.

6. The study has a significant limitation, however, in that it is based on administering each test to first-year college students and then looking at how well those students’ scores correlated with their GPA. Ideally, the study would look at how well test performance in high school correlated with success in college. But because such a study would take significantly longer (multiple years) to conduct, the researchers used this less ideal, but still informative, approach.

7. Disclosure: This study was funded in part by Bloomberg Philanthropies, which is also a funder of The Seventy Four.

8. The states studied were Delaware, Massachusetts, Maryland, New Mexico, and Nevada.

9. Specifically, the state tests examined were those of New Hampshire, Delaware, Illinois, and New Jersey.

10. The study is correlational, so it can’t be assumed that linking test scores to teacher evaluation had a causal effect on student test scores.
