Fact-Check: Hey Education Pundits, Stop Writing Early Obituaries for School Improvement Grants

The Fact-Check is The Seventy Four’s ongoing series that examines the ways in which journalists, politicians and leaders misuse or misrepresent education data and research. See our complete Fact-Check archive.

Conventional wisdom among journalists and education wonks is that School Improvement Grants (SIGs) simply did not work.

The program, which was part of the federal economic stimulus and designed to help turn around struggling schools, has had many none-too-flattering post-mortems written about it. The latest comes from Politico, which declares that the $7 billion initiative “didn’t help America’s worst schools.” This is one in a long line of such proclamations.

SIG’s skeptic-in-chief is Andy Smarick, a partner at Bellwether Education Partners. (Smarick has written for The Seventy Four, and Bellwether’s co-founder Andy Rotherham sits on The Seventy Four’s board of directors.) Smarick has waged a relentless one-man crusade against the program, calling it “disappointing,” “heartbreaking,” a “disaster,” a “tragedy,” and “the greatest failure in the U.S. Department of Education’s 30-plus year history.”

His skepticism appears widely held. In 2013, when the U.S. Department of Education released test results from schools receiving a grant, news coverage called the results “mixed” and highlighted the minority of schools that had seen achievement fall.

With such dire and widespread proclamations of failure, the evidence against SIG must be overwhelming, right?

Not so much.

In fact, two rigorous studies of the program have shown positive results — which unfortunately rarely make it into news stories.

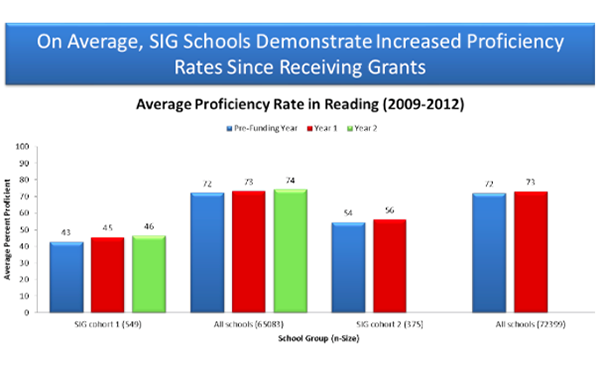

So how did it become so commonplace to assume that SIG did not work? Mostly because people are misusing the data. Take this graph, showing only small proficiency rate increases for SIG recipients, which is part of the supposedly damning evidence that many rely on.

Photo: Courtesy the Thomas B. Fordham Institute

But Stanford professor Tom Dee says using data in this way is just wrong. “I don’t think anyone who takes policy and program evaluation seriously would consider that type of descriptive evidence meaningful in terms of understanding the true impact of the program,” he said in an interview.

Or as Shanker Institute senior research fellow Matthew DiCarlo put it in a blog post on the misuse of SIG evidence, “This breaks almost every rule of testing data interpretation and policy analysis.”

The reason is that this sort of eyeball test cannot isolate the impact of SIGs. We simply don’t know what scores would have looked like in the absence of the program.

That’s why rigorous analyses that focus exclusively on the impact of SIGs are necessary. When Dee ran one such careful analysis of SIG schools in California, comparing schools that were eligible for a grant to similarly low-performing ones that weren’t, he found positive results overall. And despite headlines emphasizing the cost of the program ($7 billion!!!), Dee showed that SIG was relatively cost effective compared to class-size reductions.

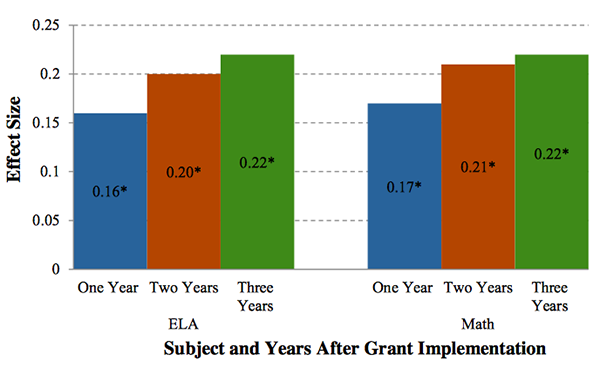

Another study of SIG recipients in Massachusetts, by the American Institutes for Research, found statistically significant achievement gains in all three years of the program across both math and English. The effect sizes were fairly large, on the lower end of estimates of the impacts of New Orleans’ charter school reforms. (On the other hand, the research found that SIG had no impact on student attendance.)

Photo: Courtesy the American Institutes for Research

None of this is to say that SIG has clearly been a success. We don’t have much evidence from states other than Massachusetts and California, and it’s certainly possible — even likely — that the program was successful in some places and less successful in others, as the recent Politico story argued. Indeed, some reports suggest that implementation of the program has been difficult. More rigorous research is in the pipeline, so time will tell.

For now, though, confident declarations are getting far ahead of existing evidence.

It’s also plausible that the large amount of money dedicated to SIG could have been used more efficiently for other purposes; it’s difficult to know.

To some extent, the misinterpretation of SIG evidence is the Department of Education being hoisted by its own overheated rhetoric. In announcing the program in 2009, Secretary of Education Arne Duncan declared, “We want transformation, not tinkering.” Although the gains in the Massachusetts and California studies are real and important, it would be hard to call them transformational.

But maybe a program that — at least in some places — resulted in steady, meaningful progress is worth celebrating, not condemning.
