‘Distrust, Detection & Discipline:’ New Data Reveals Teachers’ ChatGPT Crackdown

Survey shows that as students increasingly turn to generative AI for schoolwork, educators grow more wary of their pupils’ academic integrity.

Get stories like this delivered straight to your inbox. Sign up for The 74 Newsletter

New survey data puts hard numbers behind the steep rise of ChatGPT and other generative AI chatbots in America’s classrooms — and reveals a big spike in student discipline as a result.

As artificial intelligence tools become more common in schools, most teachers say their districts have adopted guidance and training for both educators and students, according to a new, nationally representative survey by the nonprofit Center for Democracy and Technology. This guidance, however, lacks clear instructions on how teachers should respond if they suspect a student used generative AI to cheat.

“Though there has been positive movement, schools are still grappling with how to effectively implement generative AI in the classroom — making this a critical moment for school officials to put appropriate guardrails in place to ensure that irresponsible use of this technology by teachers and students does not become entrenched,” report co-authors Maddy Dwyer and Elizabeth Laird write.

Among the middle and high school teachers who responded to the online survey, which was conducted in November and December, 60% said their schools permit the use of generative AI for schoolwork. That is double the share who said the same just five months earlier on a similar survey. And while a resounding 80% of educators said they have received formal training about the tools, including on how to incorporate generative AI into assignments, just 28% said they've received instruction on how to respond if they suspect a student has used ChatGPT to cheat.

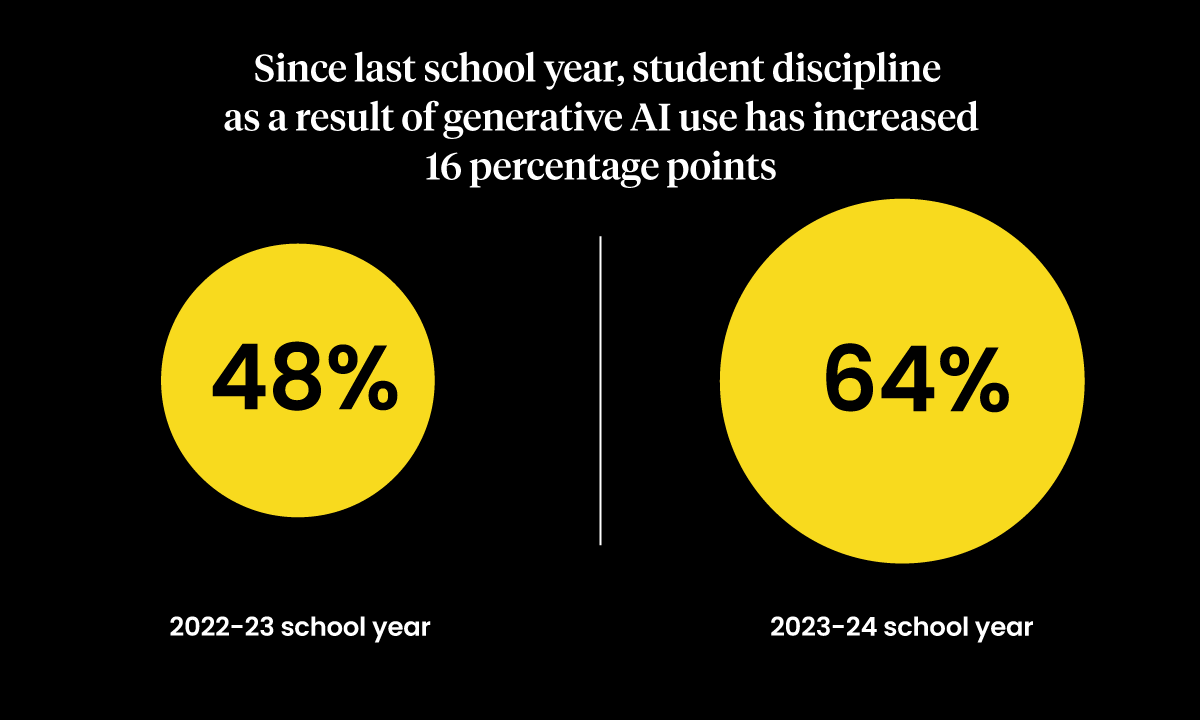

That doesn't mean, however, that students aren't getting into trouble. Among survey respondents, 64% said they were aware of students who had been disciplined or faced other consequences (including not receiving credit for an assignment) for using generative AI on a school assignment. That represents a 16-percentage-point increase from August.

The tools have also affected how educators view their students, with more than half saying they’ve grown distrustful of whether their students’ work is actually theirs.

Fighting fire with fire, a growing share of teachers say they rely on digital detection tools to sniff out students who may have used generative AI to plagiarize. Sixty-eight percent of teachers — and 76% of licensed special education teachers — said they turn to generative AI content detection tools to determine whether students’ work is actually their own.

The findings raise significant equity concerns for students with disabilities, the researchers concluded, especially given research suggesting that such detection tools are ineffective.

