Beyond the Scantron: Back-to-School 2020 Should Start With Assessments to Determine How COVID-19 Shutdown Impacted Student Achievement, Says Former Massachusetts Ed Leader

This piece is part of “Beyond the Scantron: Tests and Equity in Today’s Schools,” a three-week series produced in collaboration with the George W. Bush Institute to examine key elements of high-quality exams and illuminate why using them well and consistently matters to equitably serve all students. Read all the pieces in this series as they are published here. Read our previous accountability series here.

Jeffrey Nellhaus served as deputy commissioner of education in Massachusetts, a state that has led in developing high academic standards, assessing students to determine whether they are meeting them, and acting upon those results to improve student achievement. Nellhaus also served as chief of assessment for PARCC, Inc., a technical education advisor to states like New Hampshire, and is a board member for a Washington, D.C., charter school.

The former Peace Corps volunteer spoke with us about how state tests help identify student needs and improve education programs. He emphasized that validity, reliability and feasibility are central to good testing programs.

How would you describe the role that state assessments play in instruction and learning? And what role do they play in policy and accountability?

The purpose of state tests is to determine the extent to which students are achieving the state’s academic standards. The results of the tests are used by educators to inform improvements in instruction and as a primary indicator of effectiveness in school and district accountability systems.

Releasing questions from state tests helps teachers, students, and parents understand the meaning of standards by showing how they manifest in real problems. In Massachusetts, we also published students’ written responses to essay questions and extended math problems so that teachers could see proficient answers. We also provided schools an “item analysis report,” which showed how each student scored on each test question.

All of this took the guessing out of what should be taught and learned – and helped foster public acceptance of the assessments. Detailed reports of how various groups of students were performing, as required by No Child Left Behind, also highlighted achievement gaps so that assistance could be targeted to where help was most needed.

What role does the National Assessment of Educational Progress play?

NAEP provides data that allows you to compare results by state and track trends over time.

I would argue that NAEP has played an important role in improving the quality of state tests. It paved the way for states to measure higher-order skills by including questions on their tests that required written responses. NAEP also provided a model for states to report results using achievement levels: basic, proficient, and advanced. NAEP defined proficient as a strong command of the subject matter and the ability to solve problems. That is a relatively high standard, one that fewer than half of the nation’s students meet to this day, but it is the standard students need to reach in order to succeed in college and in today’s workforce.

When states first began to report the results of their assessments using achievement levels, they chose to adopt less rigorous definitions of proficiency than NAEP. Some states reported as many as 70 percent or more of their students performing at the proficient level. Over time, business leaders, policymakers and educators in those states noticed the discrepancy. NAEP held their feet to the fire and, in most cases, states raised their standards.

What role do cut scores play in determining proficiency levels? Who determines those scores?

Cut scores refer to the minimum score on a test associated with a given achievement level. They are determined by committees of educators and others with subject-area knowledge through what is called a standard-setting process, which takes two to three days.

There are two basic approaches to the standard-setting process: a test-based approach and an examinee-based approach. Broadly speaking, in the test-based approach, participants judge which of the questions on the test students at each achievement level should be able to answer correctly. In the examinee-based approach, participants examine a wide range of profiles of students’ responses to both essay and multiple-choice questions and judge which of the profiles are most closely associated with each achievement level.

In each approach, panelists are required to base their judgments on the state’s achievement-level definitions, not on personal notions they may hold. Standard-setting committees typically include eight to 12 participants per subject and grade and involve several rounds of judgments. There’s more to it, but in the end, individual participants’ judgments are aggregated to generate the cut score for each achievement level.
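The interview does not spell out how individual judgments are combined. As a rough illustration only, the Python sketch below assumes a modified Angoff-style version of the test-based approach: each panelist estimates, for every question, the probability that a student just barely at the “Proficient” level would answer it correctly, each panelist’s estimates are summed into an expected raw score, and those individual cuts are averaged across the panel. The ratings, the five-question test, and the names `panelist_cut` and `panel_cut` are hypothetical, and the sketch models a single round of judgments for a single cut score.

```python
from statistics import mean, median

# Hypothetical ratings for a five-question test: each list holds one panelist's
# estimates of the probability that a student just barely at "Proficient"
# answers each question correctly. Illustrative numbers only.
ratings = {
    "panelist_a": [0.9, 0.7, 0.6, 0.4, 0.5],
    "panelist_b": [0.8, 0.8, 0.5, 0.3, 0.6],
    "panelist_c": [0.9, 0.6, 0.7, 0.5, 0.4],
}


def panelist_cut(item_probs: list[float]) -> float:
    """One panelist's cut: the expected raw score of a borderline student."""
    return sum(item_probs)


def panel_cut(all_ratings: dict[str, list[float]], use_median: bool = False) -> float:
    """Aggregate the panelists' individual cuts into one recommended cut score."""
    cuts = [panelist_cut(probs) for probs in all_ratings.values()]
    return median(cuts) if use_median else mean(cuts)


if __name__ == "__main__":
    total_points = len(ratings["panelist_a"])
    print(f"Recommended Proficient cut: {panel_cut(ratings):.2f} of {total_points} points")
```

With these made-up ratings, the individual cuts come to roughly 3.1, 3.0 and 3.1 raw-score points, so the mean-based recommendation is about 3.07 of 5 points. Whether a state uses the mean, the median or another rule, and how raw cuts are converted to reported scale scores, varies by program and is not described in the interview.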

Ultimately, the work of the committees is shared with the state’s chief state school officer and/or state board of education for adoption.

Did you ever face pressure to lower your standards during your time in Massachusetts?

In Massachusetts, we based the names and definitions of our achievement levels on those used by NAEP. There was little pushback, except over adopting the names NAEP used for its first two levels: “Below Basic” and “Basic.” Instead, the Board of Education adopted the names “Failing” and “Needs Improvement” for the first two levels, keeping the NAEP names “Proficient” and “Advanced” for the third and fourth levels. The board’s thinking was to send a clear message that proficiency was the goal and that a name like “Basic” might be construed as good enough.

At the high school level, however, the Massachusetts Education Reform Law required students to pass the state’s high school tests in order to graduate. The Board of Education had to determine which achievement level would count as passing. As you can imagine, this was very controversial, with some educators opposing the requirement altogether in the belief that it would cause many disadvantaged students to drop out.

The requirement held thanks to strong support from the business community, the governor, key legislators, urban superintendents and the commissioner of education. In the first few years the high school requirement was in effect, the legislature provided grants to assist districts with high numbers of students who did not pass the tests on their first attempt. The funding was critical to improving instruction and providing other student supports. As a result, dropout rates declined for the first class of students held to account.

A lot of the conversations about testing can be very nuanced and technical. What do you wish that parents and teachers better understood about tests?

Test literacy requires an understanding of three concepts, and of the interplay among them: validity, reliability and feasibility.

A test is valid if it measures what is claimed to be measured. In the case of state assessments, the claim is that the tests are measuring performance on the state’s content standards. But test results are used for various purposes, so validity comes in different forms.

A state test has content validity if the items and tasks on the test are aligned to one or more of the state’s content standards. States often convene committees of teachers to review test questions before they appear on tests to confirm they are aligned to the standards, grade level appropriate and free of bias.

There is also construct validity, sometimes referred to as overall validity. For a state test to have overall validity, the questions must not only be aligned to standards, but also representative of the full range of the standards.

An example of a test lacking overall validity might be a driving test where the examinee is never asked to make a left-hand turn in an intersection against oncoming traffic. To establish overall validity, state education officials work with their testing vendors to map out how the standards will be covered and publish that information in test frameworks or blueprints.

College entrance exams like the SAT and ACT claim to have predictive validity. They establish predictive validity with research studies that show the likelihood that students with various scores will succeed in entry-level college courses.

A test is considered to be reliable if it generates results that are stable and precise. Reliability is a function of the number and quality of questions on a test. A test with relatively few, poorly designed questions will not be reliable. That means that state tests, designed to measure student performance on a large amount of content, may require several class periods.
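Classical test theory offers one way to see why the number of questions matters. The Spearman-Brown prophecy formula predicts the reliability ρ′ of a test lengthened or shortened by a factor k, assuming the added or removed questions are comparable in quality to the existing ones: ρ′ = kρ / (1 + (k − 1)ρ). The Python sketch below is an illustration that does not come from the interview; the function name `spearman_brown` and the starting reliability of 0.80 are hypothetical.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predict reliability after changing test length by `length_factor`.

    Assumes the added or removed questions are comparable in quality
    (parallel) to the existing ones, as the classical formula requires.
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


if __name__ == "__main__":
    base = 0.80  # hypothetical reliability of a full-length test
    for factor in (0.5, 1.0, 2.0):
        predicted = spearman_brown(base, factor)
        print(f"length x{factor}: predicted reliability {predicted:.2f}")
```

Under these assumptions, halving a test with reliability 0.80 lowers the predicted reliability to about 0.67, while doubling it raises the prediction to about 0.89. The formula does not capture question quality; it simply assumes new questions behave like the existing ones.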

Test accommodations for students with disabilities and English learners, reasonable testing times, and testing environments free of interruptions and distractions all add to test validity and reliability.

The feasibility of a test is primarily a function of the amount of time it takes to administer and the cost. Tests that are too long and too costly don’t endure.

So, in any discussion about testing, keep validity, reliability and feasibility in mind. When someone argues for a shorter testing time, you need to ask whether validity and reliability will be compromised. On the other hand, if someone proposes that the state replace its current tests with portfolios, you need to ask whether it’s feasible, given the amount of training and oversight it would take to establish reliability.

As schools begin the 2020-21 school year in the midst of COVID-19, how should schools and districts consider using assessment to help students succeed academically?

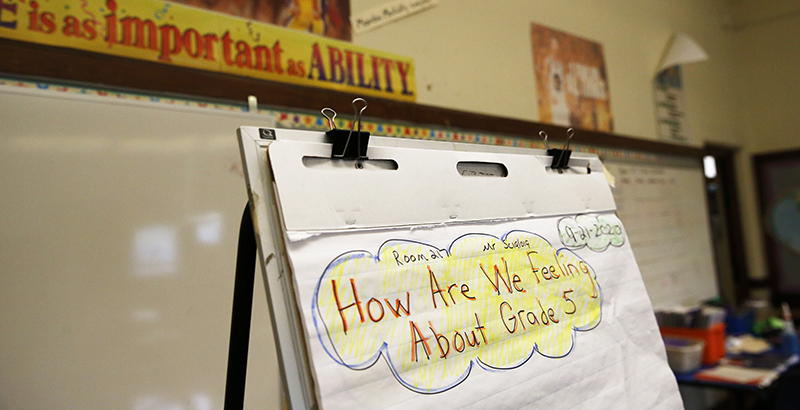

It will be critical for schools to conduct assessments as we begin the 2020-21 school year to determine how much last spring’s disrupted schooling impacted student achievement.

Districts that have access to assessments aligned to state standards, like interim assessments or pools of released state test questions, will be able to hit the ground running.

The assessments will likely reveal learning losses and gaps. Schools may want to spend the first few weeks helping to close them, but the goal should be to begin instruction on current grade-level material as quickly as possible, integrating instruction on prior-year subject matter as needed. Ongoing use of locally developed or commercially available assessments to monitor progress periodically throughout the year will be more critical this year than ever.

Holly Kuzmich is executive director of the George W. Bush Institute.

Anne Wicks is the Ann Kimball Johnson Director of the George W. Bush Institute’s Education Reform Initiative.

William McKenzie is senior editorial advisor at the George W. Bush Institute.

Get stories like these delivered straight to your inbox. Sign up for The 74 Newsletter
